Local AI Playground

Local AI Playground is a native app for experimenting with AI models locally. It aims to remove the usual barriers to entry, so you can run experiments without technical setup and without a dedicated GPU.

Key Features and Benefits:

- Easy AI Experiments: Experiment with AI models without technical setup or a dedicated GPU.

- Free and Open-Source: Local AI Playground is both free and open-source, ensuring accessibility for all.

- Compact and Efficient: The Rust backend keeps the app memory-efficient and compact, at under 10MB on the supported platforms.

- CPU Inferencing: Inference runs on the CPU and adapts to the number of available threads, so it works across a wide range of machines.

- GGML Quantization: The tool supports GGML quantization formats, including q4, q5.1, q8, and f16.

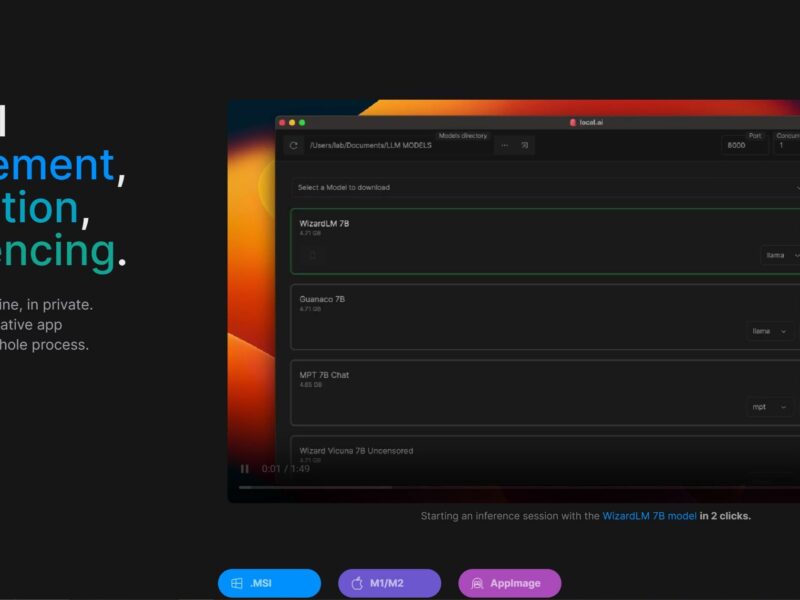
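To give a rough sense of what these quantization options mean for model size, the sketch below estimates the storage cost per weight. It assumes the labels correspond to GGML's q4_0, q5_1, q8_0, and f16 formats, where the quantized types pack 32 weights per block alongside one or two fp16 values (a scale, plus a minimum for q5_1). The 7-billion-parameter model is a hypothetical example, and real model files also carry metadata and sometimes unquantized tensors, so actual sizes differ somewhat.

```python
# Bytes per block of 32 weights, assuming GGML's block layouts:
#   q4_0: fp16 scale + 32 x 4-bit values              = 2 + 16         = 18 bytes
#   q5_1: fp16 scale + fp16 min + 32 x 5-bit values   = 2 + 2 + 4 + 16 = 24 bytes
#   q8_0: fp16 scale + 32 x 8-bit values              = 2 + 32         = 34 bytes
#   f16:  32 x 16-bit values (no per-block scale)     = 64 bytes
BLOCK_SIZE = 32
BYTES_PER_BLOCK = {"q4_0": 18, "q5_1": 24, "q8_0": 34, "f16": 64}


def bits_per_weight(fmt: str) -> float:
    """Average storage cost of one weight in the given format."""
    return BYTES_PER_BLOCK[fmt] * 8 / BLOCK_SIZE


def estimated_size_gb(n_params: int, fmt: str) -> float:
    """Approximate weight storage in gigabytes (10**9 bytes), ignoring metadata."""
    return n_params * bits_per_weight(fmt) / 8 / 1e9


if __name__ == "__main__":
    n_params = 7_000_000_000  # hypothetical 7B-parameter model
    for fmt in BYTES_PER_BLOCK:
        print(f"{fmt}: {bits_per_weight(fmt):.1f} bits/weight, "
              f"~{estimated_size_gb(n_params, fmt):.1f} GB")
```

Under these assumptions, q4_0 costs 4.5 bits per weight versus 16 for f16, so a 4-bit model needs a little over a quarter of the f16 storage, at the cost of some numerical precision.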

- Model Management: Track all your AI models in one place, with resumable and concurrent downloads and sorting by usage.

- Digest Verification: Check the integrity of downloaded models with BLAKE3 and SHA256 digests.

- Inferencing Server: Start a local streaming server for AI inferencing in two clicks. It also includes a quick inference UI, writes to .mdx files, and more.
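Digest verification amounts to hashing the downloaded file and comparing the result with a published checksum. The sketch below covers the SHA256 half using only Python's standard `hashlib`; the file path and expected digest are placeholders. BLAKE3 is not in the standard library (the third-party `blake3` package offers a similar `update`/`hexdigest` hasher), so it is left out here, and this is not necessarily how Local AI Playground performs the check internally.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so multi-gigabyte models never load fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path: Path, expected_sha256: str) -> bool:
    """Return True if the file's SHA256 matches the expected hex digest."""
    actual = sha256_file(path)
    # Normalize the expected value (hexdigest() is lowercase) and compare in constant time.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

Usage might look like `verify_download(Path("model.bin"), "<published sha256>")`, with both arguments hypothetical. Reading in fixed-size chunks keeps memory use flat regardless of model size.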

User Benefits:

- Seamless Experimentation: Conduct AI experiments locally without technical complications.

- Zero Cost: Use Local AI Playground free of charge.

- Efficient Resource Usage: The Rust backend ensures memory efficiency and a compact size.

- Adaptive Inferencing: CPU inferencing adapts to the available threads, so it runs well across different computing environments.

- Optimized Quantization: GGML quantization options shrink the memory and disk footprint of models.

- Simplified Model Management: Keep track of AI models in one place, with built-in download management.

- Guaranteed Model Integrity: BLAKE3 and SHA256 digest verification confirms that downloaded models are intact.

- Quick Inferencing Server: Start a local streaming server for AI inferencing with minimal effort.
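Resumable downloading is commonly built on HTTP range requests: if a partial file already exists, the client asks the server only for the remaining bytes. The sketch below shows that general technique with Python's standard `urllib`. It assumes the model host honors the `Range` header (replying 206 Partial Content); the URL and file names are hypothetical, and it does not describe how Local AI Playground itself implements downloads.

```python
import urllib.request
from pathlib import Path


def range_header(existing_bytes: int) -> dict[str, str]:
    """Request only the bytes after what is already on disk (no header for a fresh download)."""
    return {"Range": f"bytes={existing_bytes}-"} if existing_bytes > 0 else {}


def resume_download(url: str, dest: Path, chunk_size: int = 1 << 20) -> None:
    """Append the missing tail of `url` to `dest`, restarting if the server ignores Range."""
    existing = dest.stat().st_size if dest.exists() else 0
    req = urllib.request.Request(url, headers=range_header(existing))
    with urllib.request.urlopen(req) as resp:
        # 206 means the server honored the range; 200 means it is sending the whole file,
        # so the partial file is overwritten instead of appended to.
        mode = "ab" if resp.status == 206 else "wb"
        with dest.open(mode) as out:
            while chunk := resp.read(chunk_size):
                out.write(chunk)
```

A call such as `resume_download("https://example.com/model.bin", Path("model.bin"))` can be repeated after an interruption. If the file is already complete, the server typically answers 416 Range Not Satisfiable, which `urlopen` raises as an `HTTPError`; a fuller version would treat that as success. Because a resumed file can still be corrupted, checking its digest once the download finishes is a sensible final step.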

Summary:

Local AI Playground provides an accessible, efficient environment for testing AI models locally. With CPU inferencing, GGML quantization, model management, and a built-in inferencing server, it simplifies both experimentation and model management. Because it is user-friendly and open-source, it is a strong option for anyone who wants to experiment with AI models without technical setup or a dedicated GPU.