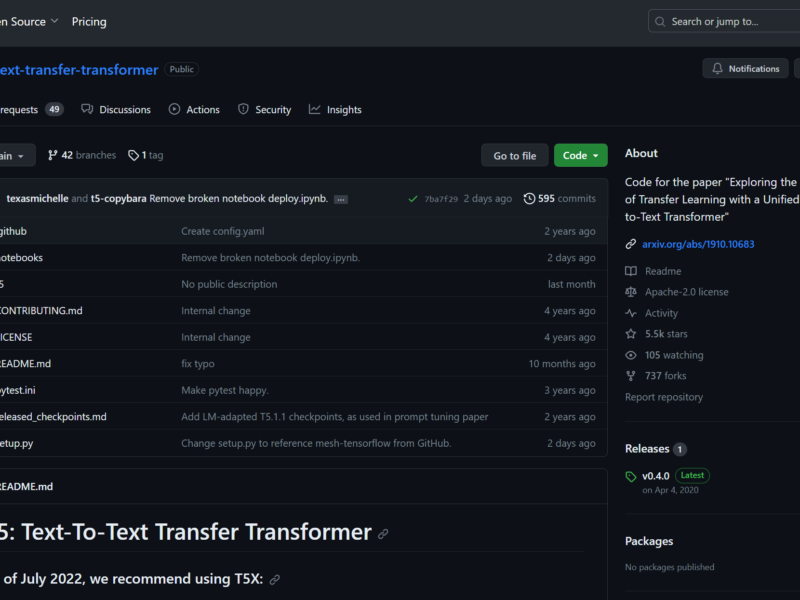

Google T5

Text-To-Text Transfer Transformer (T5) is a model from Google Research, built to explore the limits of transfer learning. It casts every NLP task as text in, text out, so a single transformer pre-trained on a large text corpus can be fine-tuned for many tasks; the paper reported state-of-the-art results on multiple NLP benchmarks. The T5 library contains the code for reproducing the experiments in the project’s paper and provides modules for training and fine-tuning models on mixtures of text-to-text tasks.
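The text-to-text framing means each example is just an input string and a target string, usually with a task prefix on the input. Below is a minimal sketch in plain Python with no dependency on the T5 library. The helper name `to_text_to_text` and the `PREFIXES` table are hypothetical; the prefix strings follow the examples given in the T5 paper.

```python
# Hypothetical helper for illustration; not part of the t5 package.
PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
}


def to_text_to_text(task: str, source: str, target: str) -> dict:
    """Cast one example into the text-in, text-out form."""
    return {"inputs": PREFIXES[task] + source, "targets": target}


example = to_text_to_text("translate_en_de", "That is good.", "Das ist gut.")
print(example)
```

Because every task shares this shape, one model, one loss, and one decoding procedure can serve translation, summarization, and classification alike.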

Key Features:

- t5.data: A package for defining Task objects that provide tf.data.Datasets.

- t5.evaluation: Contains metrics to be used during evaluation and utilities for applying these metrics at evaluation time.

- t5.models: Contains shims for connecting T5 Tasks and Mixtures to a model implementation for training, evaluation, and inference.
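To make the division of labor between these packages concrete, here is a rough, self-contained sketch of the idea: a task bundles a data source, a preprocessor, and metric functions. `SketchTask` and `accuracy` are hypothetical stand-ins written for this example, not the library's classes, and the metric signature (targets and predictions in, a dict of named scores out) is an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List

Example = Dict[str, str]


def accuracy(targets: List[str], predictions: List[str]) -> Dict[str, float]:
    """Hypothetical metric: percentage of predictions that match their targets."""
    if not targets:
        return {"accuracy": 0.0}
    correct = sum(t == p for t, p in zip(targets, predictions))
    return {"accuracy": 100.0 * correct / len(targets)}


@dataclass
class SketchTask:
    """Hypothetical stand-in for a task: data source + preprocessor + metrics."""
    name: str
    source: Callable[[], Iterable[Example]]
    preprocess: Callable[[Example], Example]
    metric_fns: List[Callable[[List[str], List[str]], Dict[str, float]]] = field(
        default_factory=list
    )

    def examples(self) -> List[Example]:
        # Apply the preprocessor to every raw example from the source.
        return [self.preprocess(ex) for ex in self.source()]

    def evaluate(self, predictions: List[str]) -> Dict[str, float]:
        # Score predictions against the targets with every registered metric.
        targets = [ex["targets"] for ex in self.examples()]
        scores: Dict[str, float] = {}
        for fn in self.metric_fns:
            scores.update(fn(targets, predictions))
        return scores


task = SketchTask(
    name="toy_sentiment",
    source=lambda: [
        {"inputs": "great film", "targets": "positive"},
        {"inputs": "dull plot", "targets": "negative"},
    ],
    preprocess=lambda ex: {
        "inputs": "sentiment: " + ex["inputs"],
        "targets": ex["targets"],
    },
    metric_fns=[accuracy],
)
scores = task.evaluate(["positive", "positive"])
print(scores)
```

In the real library the data side yields tf.data.Datasets rather than Python lists, and the model shims consume those datasets for training, evaluation, and inference.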

Usage:

- Dataset Preparation: Data can come from a Task (including the TfdsTask and TextLineTask variants) or be loaded directly from a preprocessed TSV file.

- Installation: The library is published on PyPI as t5 and can be installed with pip.

- Setting up TPUs on GCP: Requires setting variables for your GCP project, zone, and GCS bucket before launching jobs.

- Training, Fine-tuning, Eval, Decode, Export: Multiple commands are provided for these operations.

- GPU Usage: Models can also be trained and run on GPUs instead of TPUs.
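For the TSV route mentioned under Dataset Preparation, a file can be turned into text-to-text examples with the standard library alone. This is a minimal sketch that assumes a two-column layout (input, then target, separated by a tab); `read_tsv_examples` is a hypothetical helper, not a function from the T5 library.

```python
import csv
import io
from typing import Dict, Iterator, TextIO


def read_tsv_examples(handle: TextIO) -> Iterator[Dict[str, str]]:
    """Yield {"inputs", "targets"} dicts from a two-column TSV stream."""
    # QUOTE_NONE keeps quote characters in the text as literal characters.
    reader = csv.reader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    for row in reader:
        if len(row) != 2:
            # Skip blank or malformed lines rather than guessing their meaning.
            continue
        yield {"inputs": row[0], "targets": row[1]}


# An in-memory stream stands in for a real file opened with open(path, newline="").
sample = io.StringIO(
    "summarize: a long article\ta short summary\n"
    "malformed line\n"
)
examples = list(read_tsv_examples(sample))
print(examples)
```

Skipping malformed rows silently is a choice made for brevity here; a real pipeline would more likely log or count them.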

Use Cases:

- Reproducing Experiments: The T5 library can be used to reproduce the experiments in the project’s paper.

- Model Development: Its modules for training and fine-tuning on mixtures of text-to-text tasks can serve as a starting point for new models.

The code for T5 is open-source and available on GitHub under the Apache-2.0 license.